The Chinese Room Thought Experiment by John Searle

John Searle introduced the Chinese Room thought experiment in 1980 in order to give people a way to picture the difference between what computers do and what the human mind does.

The thought experiment was rendered necessary because many analytic philosophers have promoted CTM – the computer theory of mind. CTM is almost certainly not true. Computers are machines and machines are rule-following devices. Gödel’s Theorem and Alan Turing’s analysis of the Halting Problem prove that even mathematics is not simply a rule-following exercise. If it were, then mathematics could be formalized – meaning it could be reduced to the manipulation of symbols without having to worry about what those symbols mean. In fact, in such a scenario, “symbols” per se are eliminated and simply replaced with zeros and ones. Mathematical formalism would mean that truth is irrelevant, but Gödel’s Theorem relies on truth at crucial moments in its proof. The human mind is capable of “seeing” or perceiving truth, at times, such as with Gödelian propositions and self-evident axioms such as P = P, in a way that cannot be reduced to rule-following and algorithms. Because of these issues, no machine can replace human mathematicians for solving the outstanding problems of mathematics. And the halting problem demonstrated that non-algorithmic (i.e., non-computer) methods are necessary for testing algorithms. The process cannot be automated and thus the human mind is capable of doing something other than following algorithms.

Sam Harris recently stated that mathematicians are likely to be replaced by machines in the near future. He apparently is unfamiliar with the work of Gödel or Alan Turing.

Nevertheless, ever since simple calculating machines were invented, such as the one created by Blaise Pascal, certain people, perhaps of low emotional intelligence and thus of poor phenomenological insight – insight into the nature of human subjective experience – have imagined that calculating machines “think.” This, despite the vigorous protestations of the inventors of these devices who insisted that the machines were not thinking; they were merely rendering it unnecessary for humans to have to bother with the boring mechanical aspects of thought – precisely those things that do not require insight or problem-solving. Machines use algorithms, step-by-step procedures to reach guaranteed results for well-defined questions, such as Google directions. Such directions can be followed mindlessly with no real understanding of why the directions are what they are or even knowing where the directions are taking you.

The beauty of the Chinese Room thought experiment is that it renders discernible the difference between what computers are doing and what the human mind does, at least some of the time, and it does it using a kind of picture or allegory that appeals to intuition and right-hemispheric understanding.

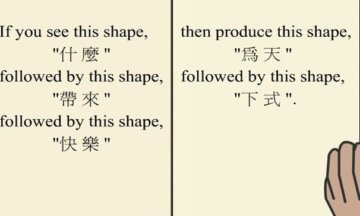

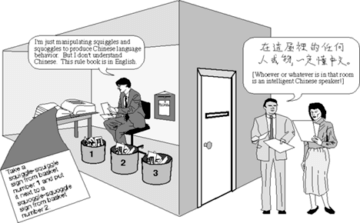

In the scenario, there is a speaker of Chinese outside a room. Inside the room is a man with a rule-book. Crucially, the man in the room does not understand Chinese. The rule-book, or manual, is a list of algorithms, or instructions, of the type described below.

There is a slot in the door of the room through which the speaker and reader of Chinese can pass his questions and through which the man in the room can pass his “answers.”

The rules in the manual that the man in the room is following are a series of “If…then…” statements. If you see this shape, write this shape. None of it means anything to the man with the rule-book.
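The rule-book can be pictured as a bare lookup table. The Python sketch below is only an illustration under stated assumptions: the entries, the fallback slip, and the names `RULE_BOOK` and `man_in_room` are invented here and are not part of Searle's description. The function compares shapes and copies out shapes; nothing in it depends on what the characters mean.

```python
# Hypothetical rule-book: each entry pairs an incoming shape with the
# shape to write back. The entries are invented for illustration.
RULE_BOOK = {
    "你好吗": "我很好",
    "你叫什么名字": "我没有名字",
}

# Slip to pass back when no rule matches (also an assumption).
FALLBACK = "请再说一遍"


def man_in_room(slip: str) -> str:
    """If you see this shape, write that shape. No meaning is consulted."""
    return RULE_BOOK.get(slip, FALLBACK)


# A question is passed through the slot and an "answer" is passed back.
answer = man_in_room("你好吗")
```

To the reader outside the room the exchange looks like a conversation. Inside the function there is only string matching.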

The man in the room understands neither the input nor his own output. He understands neither the questions nor the answers. He is literally like a machine; a mere rule-following device. The man in the room represents what a computer is doing.

To the Chinese speaker and writer outside the room, the man/rule-book combo seems intelligent. To the Chinese speaker, he has asked a meaningful question which he himself understands, and the responses he gets from the man in the room, the Chinese speaker also understands. The Chinese speaker understands the questions and the answers and might imagine that the man in the room does too, but, of course, the Chinese speaker is mistaken.

The situation could, perhaps, be compared with someone who had memorized the rule-book and could answer in phonetic Chinese – meaning he has learned how to say words in Chinese but has no idea what he is saying.

Phenomenologically, we all know the difference between understanding a question and not understanding a question, whether because we could not hear the question properly or because we do not understand the question itself.

Searle chose the Chinese language for his thought experiment simply because most speakers of English have no knowledge of this non-Indo-European language which, on top of its unfamiliarity, relies on exact pronunciation to communicate meaning because it is a “tonal” language, something very hard to master for the rest of us. Whether a word is pronounced with a rising inflexion or not, for instance, can turn the word into another word entirely. Plus, English has no cognates at all in Chinese, meaning that it shares no words in common. This differs from the situation with Indo-European languages, many of which have words that are either the same or so similar that it is possible to guess their meaning – especially in context, e.g., “water,” and “Wasser” in German.

Thus, the English speaker in the room will truly have no idea at all, nor will he be able to guess, what Chinese words mean. Since some Chinese characters began as highly stylized pictures of things, like a woman sweeping with a broom, it might occasionally be possible to guess a character’s meaning. However, in the thought experiment, we are imagining that this never occurs.

So, hopefully it is possible to see the difference between the man in the room with his rule-book and the speaker of Chinese.

It is possible to have the illusion that computers (the man in the room) are intelligent and understand things because, for instance, we ask questions of Google, and we get a list of possible replies which are often relevant. It can seem like Google/the computer understands the question and gives a meaningful reply. In fact, the reply can be meaningful, but it is only meaningful to us, not to the computer. The computer has encountered a series of zeros and ones and responded with equally meaningless zeros and ones that light up pixels on a computer monitor in a way that makes sense to literate people who know how to read. But it means nothing to the computer.

For this illusion to work, genuinely intelligent human beings have programmed the computer to give responses that are meaningful to other humans. Programming a computer means supplying it with a set of algorithms that do not require insight, common sense, or understanding to function.

It is sometimes possible to get an algorithm to write another algorithm, but it is not possible to test the validity of algorithms with another algorithm, at least not all the time. That is what the halting problem established. The validity of an algorithm, i.e., whether the results of an algorithm are accurate and true, must be checked at some point in the process non-algorithmically. E.g., a method of doing long division is an algorithm. To check that this method is legitimate, the results must be verified. To see whether a computer model of the weather is accurate, it is necessary to look outside and actually compare it to reality. Is the weather really doing what the computer model said it is doing?
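The core move in the halting problem can be sketched in a few lines of Python. This is a simplified illustration, not Turing's own construction: it uses zero-argument functions in place of Turing machines, and the names `make_contrary` and `always_no` are hypothetical. Given any candidate checker that claims to predict whether a program halts, one can build a program that does the opposite of whatever the checker predicts about it.

```python
from typing import Callable

Program = Callable[[], None]
Decider = Callable[[Program], bool]  # True means "this program halts"


def make_contrary(halts: Decider) -> Program:
    """Build a program that does the opposite of what `halts` predicts."""

    def contrary() -> None:
        if halts(contrary):
            while True:  # predicted to halt, so loop forever
                pass
        # predicted to loop forever, so return at once

    return contrary


def always_no(program: Program) -> bool:
    """A deliberately naive checker: claims that no program ever halts."""
    return False


contrary = make_contrary(always_no)
predicted_to_halt = always_no(contrary)  # the checker says it will not halt
contrary()  # yet the call returns immediately
actually_halted = True  # reached only because contrary() returned
```

Here the checker's prediction is wrong about `contrary`. A checker that answered "yes" instead would be wrong in the other direction, because `contrary` would then loop forever, which is why that case is described rather than run.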

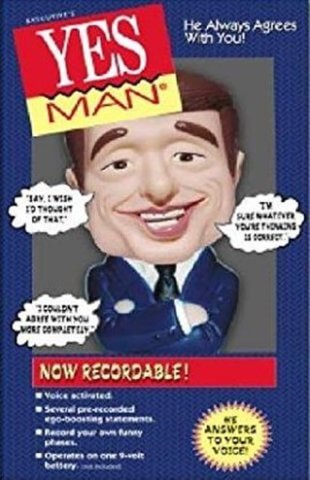

Sometimes I bring to class a plastic doll called the “Yes Man.” When turned on and tapped on the head, the Yes Man utters pre-recorded statements that all signify agreement. “When you’re right, you’re right.” “I couldn’t agree with you more completely.” “Say, I wish I’d thought of that.” “I’m sure whatever you’re thinking is correct.” The doll is manufactured as a parody of an ingratiating employee of a company hoping to get ahead by being agreeable and making his boss feel good. I bring it to class as a rebuttal of the computer theory of mind – the idea that human beings are mindless, algorithm-following automatons, i.e., machines. The intended implication is that this is not what we are like.
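The doll's behavior is simple enough to sketch in Python. The phrases are the ones quoted above; the class name `YesMan`, the `tap` method, and the assumption that the recordings play in a fixed cycle are invented for illustration, since the order in which the real toy plays them is not known here. Whatever is said to the doll is ignored entirely.

```python
from itertools import cycle

# The pre-recorded statements quoted in the text.
PHRASES = [
    "When you're right, you're right.",
    "I couldn't agree with you more completely.",
    "Say, I wish I'd thought of that.",
    "I'm sure whatever you're thinking is correct.",
]


class YesMan:
    """Hypothetical model of the doll: plays recordings in a fixed cycle."""

    def __init__(self) -> None:
        self._recordings = cycle(PHRASES)

    def tap(self, what_you_said: str = "") -> str:
        # The input is never inspected; the next recording simply plays.
        return next(self._recordings)


doll = YesMan()
reply = doll.tap("Two plus two is five.")
```

The doll agrees with a false statement as readily as a true one, because the statement plays no part in producing the reply.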

Once I showed it to a fellow professor, explaining why I used it in class, and the person said “But that is what people are like; only more complicated.”

This person had once confessed to me that, in a whole class of people practicing Rogerian counseling techniques, she had been the worst at figuring out what other people were feeling or what emotions they were expressing verbally. In these techniques, one “mirrors” the meaning and emotional component of what someone has just said (“I’m upset that my boss doesn’t understand me” is met by “Not being understood can feel frustrating”). This lack seems likely to have contributed to her imagining that we human beings are just more complicated versions of the Yes Man.

The Yes Man is literally mechanical. He is not conscious. He understands nothing. His ability to speak English exists only because an actual English-speaker had his voice recorded, someone else stuck it on a chip and someone else again put it inside the doll. This woman was telling me that this is what it is like to be her. She, apparently, experiences herself to be just like the Yes Man but with a wider repertoire of pre-recorded responses. The implication is that she is not capable of thought, understanding or feeling. She experiences herself as a mindless automaton – a plastic toy from a joke shop.

This confirms the claim that people of low emotional intelligence are the last people who should be consulted about things to do with what it means to be a human being, how we should live our lives, or even what it is like to be a human being. If such people have too much influence in the workplace rampant misery should be expected. Someone who looks at other people as “more complicated” plastic mindless dolls is not someone for whom most people would wish to work.

The idea that human beings resemble plastic toys also has moral implications – namely, deleterious ones. The person who made this comment had the air of someone who might see fit to cut someone’s throat while discussing a lunch menu over her shoulder. Her idea of human beings is not one that is consistent with anyone having an ultimate intrinsic moral worth. This gave her the somewhat frightening demeanor of someone you could tell would stand on principle rather than let anyone stand in her way.

The Chinese Room thought experiment is a rather brilliant method of appealing to a person’s intuitive understanding of what is going on in understanding. A Chinese speaker who knows what he is saying – who understands a question and responds intelligently – could not be more different from me, a non-Chinese speaker, trained to say something in phonetic Chinese whenever I heard a certain sequence of Chinese sounds, with both the prompt and the reply learned merely as meaningless sounds.

Sometimes it is claimed that though the man in the room does not understand Chinese, “the room” understands Chinese. The man and his rule-book together “understand” Chinese, though neither the man alone nor the rule-book alone evinces understanding. This is to misunderstand understanding. This thought imagines that understanding is determined just functionally, but it is not. The concepts of meaning and understanding are linked.

If someone follows instructions for origami with his eyes closed, he might produce a little animal without realizing it. He will not understand what he is doing, and thus will not grasp the meaning of what he has just done. A thinking, seeing person who knows what the intended result is supposed to be will be necessary to verify that the figure of an animal has in fact been achieved.

The reason the Chinese Room thought experiment is so brilliant and frequently cited is that it appeals to our intuitive understanding of understanding. Someone could give the correct answer to a math problem because he understood it, or because he mechanically followed an algorithm designed by someone else. Many high school students in fact do this all the time. Often they spend entire semesters doing algebra without actually understanding what algebra is or what it is for.

Normally, it is possible to tell the difference between when we understand something and when we do not. We notice the difference phenomenologically. Someone starts speaking Chinese and I notice that I do not understand. It is not possible to define “understanding” but it is possible to experience the difference between understanding and a failure to understand. This difference does involve epistemic fallibility. It is entirely possible, on occasion, to imagine that you have understood something when you have not. But this situation is exceptional. Usually, we are correct when we determine if we have understood or not. If necessary, it is usually possible to ask questions to check if we are right about this or not. If we humans were always in the dark as to whether we have understood or not, we would be in a permanent state of epistemic bewilderment and our proneness to error and even death would skyrocket.

Left-hemisphere thinking loves clarity and definitions but clarity and definitions kill humor, metaphor, symbols, poetry and intuitional understanding of emotional meaning. Once we have understood something, the results can be subjected to a left-hemisphere analysis. For some people with low emotional intelligence, the phenomenology of understanding might remain opaque and many other things to do with intuitions too. Such people cannot contrast their subjective experience of understanding with what is going on in the Chinese Room and the thought experiment is likely to be a wash. An argument may not be able to appeal to intuitive perceptions if your intuitions are defective.

It should be possible to see that imagining that your computer understands you, rather than being a mechanical device that serves as an intermediary between you and the programmers of the computer, is to engage in a false anthropomorphism. The failure to appreciate this can be compared to the limitations of the color-blind in art appreciation, or the tone-deaf for music.

Generally speaking, we love the surprising and unexpected in psychology and philosophy. Obvious truths can be banal. A general rule of thumb is that the more surprising a result in these fields, the less likely it is to be true. This may be why there has been a replication crisis in psychology, with well over half of the published results retested in at least one large replication effort proving unreproducible.

When someone like Sigmund Freud claims that all dreams are instances of wish fulfillment – the claim is so stupid and contradicted by everyday experience that it rises above the noise of the mundane and attracts attention. Many of Sam Harris’ assertions also seem indicative of extensive brain damage, filled as they are with self-contradictions, giving them a “the circus has come to town” quality. The truth is frequently no match for falsehoods.