The Halting Problem

Kurt Gödel’s Incompleteness Theorem was inspired by David Hilbert’s question “Are the axioms of a formal system sufficient to derive every statement that is true in all models of the system?” Hilbert played the same role regarding Alan Turing’s proof that the halting problem is unsolvable. Hilbert had asked: “Is there some mechanical procedure [an algorithm] for answering all mathematical problems, belonging to some broad, but well-defined class?”[1] In German this is called the Entscheidungsproblem – the decision problem.[2]

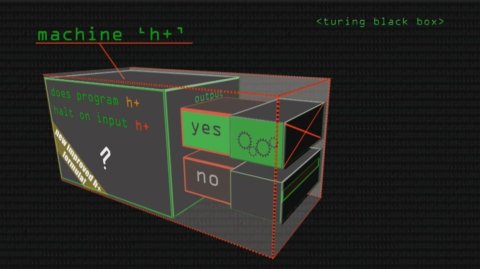

Turing found that he could answer this question by framing it in terms of a Turing machine[3] – could there be a program that could determine whether any other arbitrary computer program and input would eventually stop or just loop forever? This was called the halting problem:

“Alan Turing proved in 1936 that a general algorithm to solve the halting problem for all possible program-input pairs cannot exist.”[4]

The terms “algorithm,” “computer program,” “mechanical decision procedure,” “mechanical procedure,” “effective procedure,” or just “procedure” are, according to one computer programmer,[5] all synonyms.

Algorithms can be contrasted with heuristics.

A heuristic is a loosely defined rule of thumb often with a teleological (purposeful, goal-driven) element. E.g., “if you want to win at chess, try not to play just defensively.” Or “you should generally try to protect your queen, although you might be able to surprise your opponent if you sacrifice her and actually use her as the lure for a trap.”

An algorithm, though, is a set of instructions about what precisely to do in a given well-defined circumstance that is guaranteed to give a result.

An algorithm is a method for finding, say, the largest number that divides two other numbers exactly. It is a set of instructions; a procedure that can be written down as a series of steps. Step one – divide the larger number by the smaller number. Step two – replace the larger number with the smaller one and the smaller one with the remainder. Repeat until the remainder is zero; the last divisor is the answer.
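The division-and-remainder procedure described here is usually credited to Euclid. As a minimal sketch, it might be written like this in Python; the function name `gcd` and the sample numbers are chosen only for illustration.

```python
def gcd(a: int, b: int) -> int:
    """Largest number that divides both a and b (positive integers)."""
    # Make sure `a` holds the larger number, as in step one.
    if a < b:
        a, b = b, a
    while b != 0:
        # Divide the larger by the smaller and keep only the remainder;
        # the smaller number and the remainder become the new pair.
        a, b = b, a % b
    return a


print(gcd(1071, 462))  # 21
```

Each pass makes the second number strictly smaller, so the loop is guaranteed to stop. That guarantee is what separates an algorithm of this kind from the open-ended searches discussed later.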

The validity of an algorithm such as this one is not determined algorithmically but by an external test. Algorithms do not decide mathematical truth.[6] It will be necessary to use some other method to test whether a given algorithm is producing the correct result.

Quite often mathematics is (badly) taught as a series of such techniques, algorithms, mechanical decision procedures, while the students have no idea what they are really doing. Sometimes they are taught algorithms for a whole area of mathematics like algebra, while never even knowing what algebra even is, what it is for or why they are doing it, which is surely a spectacular waste of time.[7]

David Hilbert’s question is effectively – is there an all-purpose algorithm for finding other algorithms? Technically, an algorithm with no end, that never stops, is not really an algorithm. A method that fails is not a method at all. Is there a set procedure for discovering all and only set procedures?

If there were; if the halting problem were solvable, then computer programmers, who are just writing algorithms, could write an algorithm that could solve all the intractable and unsolvable outstanding problems in mathematics. Or, determine once and for all, which ones were solvable and which ones were not, putting mathematicians out of a job.

An example given by Roger Penrose is the Goldbach conjecture,[8] which says that every even number greater than 2 can be written as the sum of two prime numbers. Each even number is split into pairs and “for each such even number, it splits into some pair for which both members are prime.” There is an algorithm, some finite sequence of steps, for deciding whether a number is prime or not. That is not a problem. So, an algorithm is written that works through the even numbers one by one and stops only when it reaches an even number that cannot be split into two primes. If there were some way to determine whether this algorithm (program, Turing machine) ever stops, then the problem of proving or disproving the Goldbach conjecture would have been solved.
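The search Penrose describes can be sketched in a few lines of Python. The function names and the optional `limit` parameter are assumptions made for this illustration; the limit is there only so that the example can be run safely.

```python
from itertools import count
from typing import Optional


def is_prime(n: int) -> bool:
    """Trial division: a finite procedure that always gives an answer."""
    if n < 2:
        return False
    d = 2
    while d * d <= n:
        if n % d == 0:
            return False
        d += 1
    return True


def splits_into_two_primes(even: int) -> bool:
    """True if some pair p + q == even has both members prime."""
    return any(is_prime(p) and is_prime(even - p)
               for p in range(2, even // 2 + 1))


def goldbach_counterexample(limit: Optional[int] = None) -> Optional[int]:
    """Return the first even number > 2 that has no two-prime split.

    With limit=None the loop stops only if the conjecture is false.
    With a limit it gives up and returns None, which says nothing
    about the even numbers beyond the limit.
    """
    for even in count(4, 2):
        if limit is not None and even > limit:
            return None
        if not splits_into_two_primes(even):
            return even


print(goldbach_counterexample(limit=2000))  # None
```

Every individual step here is mechanical and certain to finish. Only the outer loop, run without a limit, is open-ended, and whether that loop ever stops is exactly the question the conjecture asks.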

The reader can probably intuit that this is simply not going to be possible. Has the algorithm not stopped because every even number really does split into two primes, or because the program has just not found the exception yet? There is no way to tell the difference in all cases between an answer that is not there and an answer that just has not been found yet. This question is not “decidable” (provably true or false) as far as is known. If a proof of the Goldbach conjecture is ever found, it is not going to be found by running another algorithm.

Here it is possible to see how the Halting Problem is related to Gödel’s Incompleteness Theorem. The Goldbach conjecture might be true, but so far at least, it is unprovable. There is no definite set of steps to determine one way or the other.

There is an irreducible role for insight and intuition when it comes even to the areas of human thought that are typically considered the most certain and precise. Albert Einstein wrote:

“The supreme task of the physicist is the discovery of the most general elementary laws from which the world-picture can be deduced logically. But there is no logical way to the discovery of these elemental laws. There is only the way of intuition, which is helped by a feeling for the order lying behind the appearance, and this Einfühlung [literally, empathy or ‘feeling one’s way in’] is developed by experience.”[9]

Discovery, scientific or otherwise, involves taking something obscure, unknown, dark, mysterious and rendering it relatively intelligible, known, clear, light-of-day.

The Halting Problem and Gödel’s Incompleteness Theorem offer definitive, logical, left hemisphere[10] proofs about the limit of definitive, logical, left hemisphere proofs. Anybody with a normally functioning right hemisphere should understand this intuitively – lived experience has already demonstrated this truth. An algorithm for a successful romantic relationship does not exist and if it did exist, it would kill any interest or life in the relationship, so it is not even good as a fantasy.

These rebuttals to David Hilbert’s desire for an all-knowing mechanical procedure offer salve for all those who have had to deal with autistic, Asperger Syndrome sufferers and their attempts to get rid of the ambiguous; their dislike of words with multiple meanings, ambiguity, metaphor, poetry, the connotative, and nuance; their preference for machines versus people and thus their interest in turning people into machines, even if just metaphorically.

Generally, it is too much to ask that those misguided enough to want mechanical procedures for everything and absolute certainty recognize the error of their ways and start reading novels and taking a greater interest in the role of emotion in life. Nonetheless, the rest of us can take some moral satisfaction that logicians have had to recognize the severe limits of mere logic. It is not rational to be too rationalistic.

Paradoxically, it is possible to be very certain that certainty in all things is not achievable. Outright contradiction is avoided because it is never claimed that nothing can be proven to be true: just not all things can be so proven.

Gödel’s Theorem states that an axiomatic system of sufficient complexity can be either consistent and incomplete, or inconsistent and complete, but not consistent and complete.

Completeness

In a complete system, everything true is provable. Unfortunately, a system cannot be both complete and consistent, so not everything true is provable. Any axiomatic system at the level of complexity of multiplication and beyond must contain statements not provable within that system. Call this A + P: “A” is the axiomatic system and “P” is the set of statements that can be expressed in the system and are true, yet are not decidable within it. Not all truth is susceptible of proof.

Consistency

In a consistent system, everything that is provable is true.

If inconsistency is permitted in a system, then the system can be “complete” but inconsistency will mean “proving” things that are not true.

It is better to prove too little than too much.

Inconsistency would mean, for instance, ordering rocket engines to simultaneously shut down and ignite; a surgeon who would both try to kill a patient and to cure him – a disastrous and nonsensical state of affairs.

Decidability

Completeness involves decidability. A problem is decidable if some procedure always returns a definite “true” or “false” as an answer, rather than saying “don’t know,” crashing, or looping forever in an interminable state of indecision.

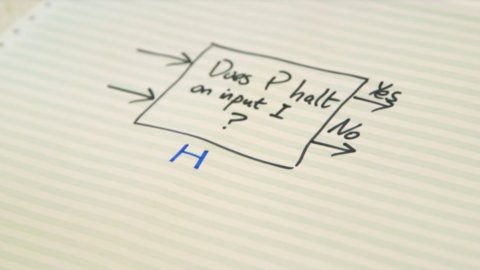

Turing’s answer to the halting problem is that there can be no halting machine, no algorithm, that looks at any given program and input and determines correctly, in all cases, whether the program will halt or whether it will just loop forever on that input.

The fact that an algorithm has not found the answer to the question yet does not mean it never will. Should the operator keep waiting or give up? Nobody knows. No halting machine can exist to tell the operator one way or the other.

Such a “decider” algorithm would be incredibly useful, but unfortunately, it is impossible.

The halting problem proves that there is more to cognition than computation. If computation were sufficient for all thought, the halting machine could do its hypothetical job. There is no set procedure, no algorithm, no computer program, for finding and identifying all set procedures, algorithms and computer programs. The rational alone is sterile. Insight and intuition must be brought in to provide the shortfall.

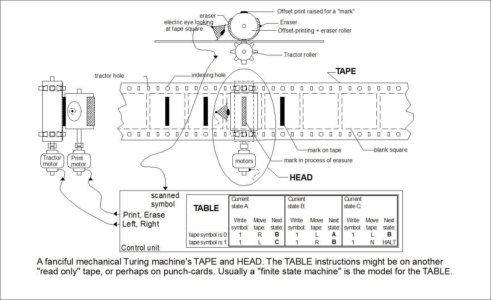

Universal Turing Machine

In 1936 Alan Turing invented the idea of the modern computer: the Universal Turing Machine. It consisted of an infinitely long paper tape divided into squares, with instructions written on it, and a read/write/erase head that examines one square at a time. The instructions tell the machine what to do next.
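To make the idea concrete, here is a minimal sketch of a tape-and-head machine in Python. It is a simplification rather than Turing’s own formulation: the rule-table format, the state names “start” and “halt,” the `max_steps` cut-off and the two example machines are all assumptions made for this illustration. The simulator plays roughly the role of the universal machine, since the rule table is handed to it as data.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (symbol to write, head move: -1 left / +1 right, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]
BLANK = "_"


def run(rules: Rules, tape_input: str, max_steps: int = 1000) -> Tuple[str, bool]:
    """Run a one-tape machine from state "start"; return (tape contents, halted?)."""
    tape = dict(enumerate(tape_input))  # squares not in the dict count as blank
    head, state = 0, "start"
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = tape.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
    contents = "".join(tape[i] for i in sorted(tape)).strip(BLANK)
    return contents, state == "halt"


# Hypothetical example machines, written for this sketch.
increment = {  # add one stroke to a number written in unary, then halt
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): ("1", +1, "halt"),
}
forever = {  # keep moving right whatever is read: never reaches "halt"
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, +1, "start"),
}

print(run(increment, "111"))            # ('1111', True)
print(run(forever, "1", max_steps=50))  # ('1', False)
```

The `max_steps` cut-off is a practical concession. A `False` in the second position means only that the machine had not halted when the budget ran out, not that it never will.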

The trouble is that some programs never stop running – they do not halt. Everyone is familiar with the little green circle and the like that tells the computer operator that a program is loading or a page is being saved, etc. Sometimes the little circle halts and sometimes it does not in which case the operator just has to exit the program. The operator never knows if he should just wait a little longer or if waiting is pointless.

Before the first modern computer was ever built, Alan Turing proved that there can never be an effective procedure for deciding, in all cases, whether p*d, a program and its input, will halt. This is true no matter how fast or powerful a computer is or what programs it runs. There can never be a “decider” program, a halting machine, that tells the operator whether another program will halt or not. So, as long as people and computers of any kind or construction exist, they will be looking at the equivalent of little green circles, wondering if the program will halt or not and having no way to know.

The halting problem is about far more than an annoying feature of computers.

The implication of the halting problem is that no one will ever have enough information to make a decision that is certain.

This remains true even if an infinite amount of information is provided.

It is always possible that there is some missing information that shows the wrong decision has been made. To determine whether this might be the case, a search is made to see if there is some missing information, e.g., are all swans white? The search will continue until relevant missing information is found. Is there some way of deciding if a search program will halt or not?

If a search is made for something that does not exist, then there is no way of knowing when to stop searching.

If the information does not exist, the program will run forever. If it does exist, and assuming the program is well-written and fit for purpose, the program will halt. But to know if the program will halt, it would be necessary to know that there is indeed missing information, which is the very thing the search is trying to determine.

Decidability would mean that when making a decision in all instances it would be possible to know in advance if relevant information exists that has just not been found yet. If this were possible, then decisions that were certain in all cases could be made, but it is not.

We cannot be sure for any arbitrary well-defined question and procedure to answer it whether that procedure will ever give us an answer.

This “shows how a fully deterministic system can be in principle unpredictable!”[11]

Presumably, this would apply to Laplace’s Demon. Laplace’s Demon is imagined to know the position of all the particles in the universe and to know the real and full laws of physics. It is imagined that the Demon would be able to predict everything that was going to happen or to explain everything that had happened. Since the halting problem is not solved even by an infinite amount of information, it will apply to the Demon too. If the Demon starts to try to solve a problem he will not know in advance, in all cases, if it is solvable nor will he know when to stop looking. As will be seen below, imagining that the halting problem can be solved leads to a contradiction.

If Turing Machines exist in the universe of Laplace’s Demon, then, since the halting “decider” program does not exist, Laplace’s Demon will not know which problems are solvable either. The limitations on computation mean that unpredictability remains.

A related problem might be answering the question: “Are the security measures taken to protect an operating system sufficient to prevent hacking?” Imagine constructing a program to determine that! Not finding an answer does not mean there is no answer. Laplace’s Demon might start developing a migraine.

The Impossible Machine Version of the Halting Problem

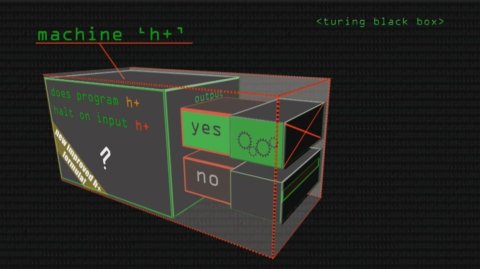

- Impossible Machine Version. Assumption for reductio: assume that there is a program for our UTM (Universal Turing Machine) that can determine for any arbitrary program+data combination whether it will halt.

- Call this program H for Halt-Check. H takes as its input a string p*d which is just some program followed by its data. We can write U(H*p*d) to say the input to U is H and the concatenation[12] of p and d with the conventional marker for their separation.

- Now, from this machine it is trivial to create the following machine C (for Crazy-Machine): C consists of this machine U and H plus just a little bit of extra code: if H says that p*d will halt, loop forever; if H says that p*d will never halt, then halt.

- Make just one more modification to create the machine R (for Really Crazy): R takes some input I and just concatenates it (by whatever method we are using to put program and data together) and feeds it to C. So, if you put input I into R it feeds (I*I) to C and we have C(I*I).

- Now, feed R to R. What happens? If R(R) halts, then R(R) loops forever; but then R(R) loops, which means that R(R) halts.

The machine R is impossible. Given H, the creation of C and of R was trivial, so something else must have gone wrong: namely, it must be the assumption that there is a program H.

- This result is shocking and very powerful. It means that we cannot be sure for any arbitrary well-defined question and procedure to answer it whether that procedure will ever give us an answer. There is no way now to realize Hilbert’s dream: we have agreed on what an — or, at least, what one kind of — effective procedure is, but we cannot be sure it will ever yield an answer in any arbitrary case. Since there is no effective way to determine what the effective procedures are (the good computer programs), it appears reason cannot discover the limits of reason. (A philosophical consequence of interest is also that this is a nicely clear example of how a fully deterministic system can be in principle unpredictable!)”

What this argument is showing is that assuming that a halting machine could exist leads to a contradiction. Assuming the opposite of what the thinker is trying to prove and deriving a contradiction from it is called an “indirect proof.”
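The construction of R can be sketched in Python, under two simplifying assumptions made for this illustration. First, any candidate for H is an ordinary function that always returns `True` (“halts”) or `False` (“loops forever”). Second, R reports “loops forever” as a string instead of actually looping, so that the example itself finishes. The names `build_r`, `check`, `optimist` and `pessimist` are hypothetical.

```python
from typing import Callable, Tuple

# A candidate for H: given a program and its input, answer "will it halt?"
Decider = Callable[[Callable, Callable], bool]


def build_r(h: Decider) -> Callable[[Callable], str]:
    """Build R from a candidate H, following the construction in the text."""
    def r(program: Callable) -> str:
        # R feeds program*program to C; C does the opposite of what H predicts.
        if h(program, program):
            return "loops forever"  # stand-in for `while True: pass`
        return "halts"
    return r


def check(h: Decider) -> Tuple[str, str]:
    """Compare H's prediction about R(R) with what R(R) actually does."""
    r = build_r(h)
    predicted = "halts" if h(r, r) else "loops forever"
    actual = r(r)
    return predicted, actual


def optimist(program, data):   # hypothetical candidate: always says "halts"
    return True


def pessimist(program, data):  # hypothetical candidate: always says "loops forever"
    return False


for candidate in (optimist, pessimist):
    print(candidate.__name__, check(candidate))
```

Both candidates are caught out: the prediction and the actual behaviour of R(R) always disagree. The same happens to any candidate that gives a fixed yes-or-no answer about R(R), however clever its internals, because R is built to consult that answer and do the opposite.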

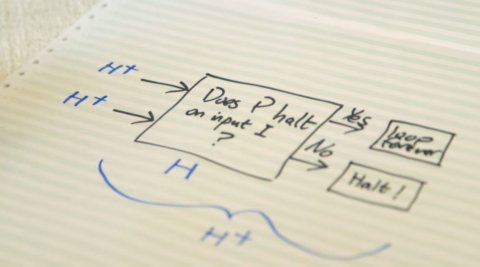

In this case one begins with the imaginary existence of a perfectly functioning halting machine; a “decider” program, program H, that can determine whether any given p*d[13] will halt or whether it will loop forever.

Another way of picturing it is this:

The halting machine, H, is an algorithm (the decider) that takes in a program and an input for the program. Can it tell the operator whether the program halts or loops forever when run on the input?

The next step in Professor Delancey’s explanation is that if “H” is possible, then H+ is also possible. In fact, it is obvious and straight-forward (trivial) to create.

H+ is a crazy machine that can be created from H. H+ says that when H says “Yes, the program with that input will halt,” then H+ will loop forever (i.e., not halt). When H says “No, the p*d will run forever,” then H+ will halt.

The question that might arise for some people at this point is “Why would anyone in his right mind create H+?” It seems like a lovely and useful decider program, a halting machine, is being taken and messed up. What’s the point? Leave H alone!

It is useful to remember that the halting machine cannot exist and we are trying to prove that it cannot exist. So we are not “ruining” an otherwise great machine. We are half wrecking a machine that is impossible and are trying to prove definitively, once and for all, that it could never have existed in the first place.

Gödel’s Incompleteness Theorem turns on a statement that says, in effect, “this statement is not provable within this axiomatic system.” If the system proves it, then the system has proved something false, since the statement says it cannot be proved, and the system is inconsistent. If the system cannot prove it, then the statement is true but unprovable. Hence, in a consistent and reasonably complex axiomatic system, not all true statements are provable.

In the case of the halting problem, it is being asked whether an algorithm can exist that could tell in all cases whether an answer can be found to all well-defined questions. We are then showing that answering “yes” to that question leads to a contradiction. We are going to feed the modified halting machine a question that it cannot answer without generating contradictory and impossible results, proving that the imagined halting machine cannot determine in all cases whether programs with given inputs will halt or not.

It should be remembered that Gödel’s Theorem does not apply at the level of simple addition. Arithmetic with addition alone (known as Presburger arithmetic) is complete and decidable; unprovable statements appear only once multiplication is added.

Likewise, the halting problem poses no difficulty for incredibly simple programs. A program that says “print ‘hello’” will stop for sure, and “while (true) continue” will never halt. But in more complex cases the answer can be unknowable.

It is sometimes also possible to show with a specific algorithm whether it will halt or not. “But each [such] proof has to be developed specifically for the algorithm at hand; there is no mechanical, general way to determine whether algorithms on a Turing machine halt.”[14]

Returning to the issue of why anyone would make the crazy program H+, we are just using logic and logically, if H is possible, then H+ is trivial to create, i.e., H+ is completely logically consistent with H and can be easily derived from H. If the halting machine, the decider program could exist, then H+ definitely can exist also.

The next step is to create the really crazy machine, H++. H++ takes any input I, concatenates it with itself to make I*I, and feeds the result to H+.

The trick is that the impossible machine (really, it’s an impossible program) must also consider itself considering itself as input. Suppose you have the halt-check program H, then you make the crazy program H+. But there’s another step. You make a program H++ that takes any input and concatenates it and feeds that to H+. So, if you feed any program P to H++, then H++ feeds PP to H+.

Now: feed H++ to H++. So H++ is asking: “Will I halt or will I run forever, when considering my own program?” It will not halt if looping, and it will loop if halting; a contradiction. Making H+ and H++ is trivial if you have H, so the source of that contradiction must be the supposition that there could be an H.[15]

What does it mean to feed H++ to H++?

Again, H++ takes any input I, concatenates it with itself to make I*I, and feeds the result to H+.

This time the input is itself; H++.

It is important to know that when an input is concatenated with itself, it is not simply being added or doubled. I*I is read as a program followed by its data, so I*I means a program running with itself as input.

Windows runs Word as an input. The operating system program runs Microsoft Word which is also a program. These days we call programs like Word “apps,” as in “applications,” to distinguish them from an operating system; an OS, like Windows or Apple iOS. But they are all just programs running other programs as input.

The really crazy machine is taking the really crazy machine as input and running it. But what is the really crazy machine doing in this case?

When H++ is given itself, H++, as input, H++ creates H++*H++ and submits H++*H++ to H+.

After all, all H++ does is join an input to a copy of itself and submit the result to H+. I*I = the input running the input. The input H++ is functioning as both the operating system and the app in this case.

It is being asked if H++ runs H++ and is submitted to H+, will H+ say it will halt or loop?

Again, will the program H++ that concatenates inputs, I*I, halt or run forever when running itself as input? The program that concatenates is being taken and being made to concatenate itself. Will that process ever finish?

Now using a program as its own input is pretty crazy, but H++, the really crazy machine, is obvious and straightforward to create from H+. It is logically consistent with and derived from H+.[16]

If H is possible, then H+ is possible and H++ is possible. And if H++ takes itself as input, then this involves feeding the result to H+. The result is that if H+ says it will halt, then it will run forever. If H+ says it will loop forever, then it will halt. This is a contradiction.

Since everything that has been said follows from assuming H exists; that a halting machine exists; and we have derived a contradiction from this assumption, then this assumption must be erroneous. No such machine; no “decider” program exists or could ever exist.

“There can be no effective procedures for finding all and only effective procedures.”[17] That is what the “decider” program, the halting machine, would be trying to do vis-à-vis other programs and inputs.

Infinite Regress and The Halting Problem

Imagine the decider program, the imagined halting machine, has been given another program and an input to determine whether the program halts given that input.[18] The decider program is run for a second. It does not halt. It is run for a minute. It does not halt. The program runs for an hour. It does not halt. Is this because it is never going to halt, or because it has not been given long enough? Instead of solving the problem, it has just been reiterated.
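The predicament of waiting on a program can be sketched in Python. The set-up is an assumption made for this illustration: a “program” is modelled as a generator in which each `yield` counts as one step, and `countdown` and `spin` are hypothetical example programs.

```python
from typing import Callable, Iterator


def run_with_budget(program: Callable[[], Iterator[None]], max_steps: int) -> str:
    """Run `program` for at most `max_steps` steps and report what was seen."""
    steps = program()
    for _ in range(max_steps):
        try:
            next(steps)
        except StopIteration:
            return "halted"
    return "unknown"  # NOT "loops forever": it may just need more time


def countdown():
    """Hypothetical program that halts after 1000 steps."""
    n = 1000
    while n > 0:
        n -= 1
        yield


def spin():
    """Hypothetical program that never halts."""
    while True:
        yield


for budget in (10, 100, 10_000):
    print(budget, run_with_budget(countdown, budget), run_with_budget(spin, budget))
```

With budgets of 10 and 100 steps the two programs look identical from the outside: both come back “unknown.” Only the largest budget tells them apart, and no finite budget can ever turn “unknown” into “loops forever.”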

The decider program becomes just another instance of the halting problem. Will the halting machine halt or not?

Perhaps another halting machine could be introduced to decide if the first halting machine was going to halt or to loop forever. But it would still not be possible to know if this new halting machine was going to halt or loop forever, so it would be necessary to submit this mess to yet another halting machine, etc.

Concluding Implications

Gödel’s Incompleteness Theorem and the Halting Problem imply that axioms must be treated as hypotheses to be discarded if and when they generate contradictions. This means that mathematics takes on the nature of science, using hypotheses that are unprovable and might turn out to be false at some later date. This gives math and science a tentative, provisional quality – eliminating certainty from either of them.

To put it in Platonic terms, human knowledge is approximate at best. The Form of Truth cannot simply be thrust into the human brain. If it could, human omniscience would be possible.

The requirement that the results of thinking must be certain would make thinking impossible. Speaking to the London Mathematical Society in 1947 Alan Turing said “…if a machine is expected to be infallible, it cannot also be intelligent.[19] There are several mathematical theorems which say almost exactly that. But these theorems say nothing about how much intelligence may be displayed if a machine makes no pretence at infallibility.”[20]

Turing seems to have thought that intelligence requires a great deal more than computing:

“Intelligent behaviour presumably consists in a departure from the completely disciplined behaviour involved in computation, but a rather slight one, which does not give rise to random behaviour, or to pointless repetitive loops.”[21]

Peter Kugel writes:

Turing (1948, p. 21)[22] saw it more than fifty years ago when he suggested that, in order to be intelligent, computers would have to be given what he called “initiative.” Turing (1948, pp. 21-23) discussed initiative, but he did not really define it other than to say that it was not “discipline” – the ability to follow orders given by others. Then he gave an example (Turing, 1948, p. 22):

A very typical sort of problem requiring some sort of initiative consists of those of the form ‘Find a number n such that . . .’ This form covers a very great variety of problems. For instance problems of the form ‘See if you can find a way of calculating the function which will enable us to find the values for arguments…’ are reducible to this form.

Finding such a number is, he wrote, “clearly equivalent to finding a program.”

It is not surprising that intelligence in computers might require them to be given some initiative. You have to let people exercise initiative if you want them to behave intelligently. You cannot expect intelligence from people if you insist that they do exactly what you tell them to do in exactly the way you tell them.

Likewise, Max Planck writes:

“. . . empiricism is unassailable on the fundamental ground of pure logic; and its conclusions are equally impregnable. But if we look at it purely from the viewpoint of knowledge it leads into a blind alley, which is called solipsism. In order to escape from this impasse there is no other way open but to jump the wall at some part of it, and preferably at the beginning. This can be done only by introducing, once and for all, a metaphysical hypothesis which has nothing to do with the immediate experience of sense-perceptions or the conclusions logically drawn from them.”[23]

Pascal writes:

“It is rare that the rationalists achieve subtlety and that subtle minds are rationalistic, because the rationalists want to treat matters of intuition rationalistically, and make fools of themselves, wanting to start with definitions and then move on to principles, which is not the way to deal with this kind of reasoning. Not that the mind does not do so, but it does it implicitly, naturally, and without artifice; for it is beyond man’s wit to say how, and even to intuit it belongs only to a few.”[24]

All of these points are related to Iain McGilchrist’s comments about the functioning of the left and right hemispheres of the human brain in The Master and His Emissary.

If intuition and insight are often necessary to find algorithms and to derive testable hypotheses, what exactly are intuition and insight? Well, they clearly involve intelligence and they are not merely random. They plainly work, at least some of the time, since math and logic work and they ultimately rest on intuition and insight. They are not the result of algorithms in the brain because this supposition simply reiterates the assumption that the halting problem disproves.

One model of consciousness can be found in the allegory of Plato’s Cave. The levels outlined arguably correlate with body, mind, soul and spirit. There consciousness extends downward into the shadows and the physical realm, and upward towards God. Conscious awareness at any given moment is a search light centered on some part of this picture. The rest is unconscious. Insight and understanding seem to be a more broadly focused right hemisphere affair. They often come after sleep or when someone is in a relaxed meditative state, perhaps out for a walk – in other words, indirectly. They will often be preceded by a conscious effort to understand but then arrive after the foot comes off the accelerator pedal. As a very minor example, having tried to remember a person’s name, another word, or a phrase the information will sometimes burst unbidden into consciousness three days later while the person is thinking about something else entirely. Perhaps such things are a matter of tapping into universal consciousness – most of which is subconscious to any given individual at a particular moment in time.

Notes

[1] Penrose, Roger. The Emperor’s New Mind, p. 57.

[2] Turing got his proof after studying Gödel and Gödel was well aware of Bertrand Russell’s paradox (p. 111) “R is the set of all sets that do not have themselves as members.” If R is not a member of itself, then it is a member of itself. If R is a member of itself, then it cannot be a member of itself. (p. 101) The key part of Gödel’s Theorem, the statement that cannot be proved within the system, turned this kind of paradoxical reasoning into part of a valid mathematical argument. Penrose, p. 111.

[3] An abstract description of a computer, a Universal Turing Machine, described below.

[4] https://en.wikipedia.org/wiki/Halting_problem

[5] Max Sokolovsky.

[6] Penrose, p. 64.

[7] Driving directions to the nearest large city is an algorithm. Whether you have actually arrived at the nearest large city is not answered by the driving directions themselves.

[8] Penrose, p. 59.

[9] Planck, M., Where is Science Going? (with a preface by Albert Einstein), trans. J Murphy, Allen & Unwin, London, 1933 p. 12.

[10] See the next article based on The Master and His Emissary by Iain McGilchrist. The left hemisphere of the brain deals with the familiar and the known. The right hemisphere deals with the anomalous, the ambiguous, the uncertain and often the unprovable. It is associated with problem-solving – mathematical and otherwise.

[11] Craig Delancey. http://www.oswego.edu/~delancey/309_DIR/LLT_LECTURES/11_turing_out.html

[12] “Concatenation” just means stringing things together in a linear sequence. Here, the first item in the sequence is read as a program and the rest as the data that program runs on.

[13] “Program + data,” or “program + input.”

[14] https://en.wikipedia.org/wiki/Halting_problem

[15] Personal communication from Prof. Craig Delancey.

[16] “Not every program would be impossible if fed to H+. Many would just loop or just stop.” From Prof. Delancey. And just looping or stopping is not a contradiction.

[17] Craig Delancey – private communication.

[18] https://www.youtube.com/watch?v=wGLQiHXHWNk

[19] The following quotations and comments about Turing are from Peter Kugel’s Computing Machines Can’t Be Intelligent (. . . And Turing Said So) http://hilltop.bradley.edu/~chris/ComputersCannotBeIntelligent.pdf

[20] Turing, A.M. (1947), Lecture to The London Mathematical Society On 20 February 1947, in Carpenter and Doran (1986), p. 124.

[21] Turing, A.M. (1950), Computing Machinery And Intelligence, Mind 59 (N.S. 236), 433-460, p. 459

[22] Turing, A.M. (1948), Intelligent Machinery, in Meltzer and Michie (1969).

[23] Planck, M., Where is Science Going? (with a preface by Albert Einstein), trans. J Murphy, Allen & Unwin, London, 1933, p. 128.

[24] Pascal, Pensées, §1 (Lafuma §512).