Whose Artifice? Which Intelligence?

Artificial Intelligence has come a long way since IBM’s Deep Blue defeated chess master Garry Kasparov (in suspicious circumstances, on its second attempt) in 1997. Our most advanced AIs have gone from dubiously defeating humans at chess to convincingly defeating humans at a more exotic board game. I’ll delegate the job of explaining the game to Henry Kissinger: “In [Go], each player deploys 180 or 181 pieces (depending on which color he or she chooses), placed alternately on an initially empty board; victory goes to the side that, by making better strategic decisions, immobilizes his or her opponent by more effectively controlling territory.”

Kissinger says he was “amazed that a computer could master Go, which is more complex than chess.” And one ought not to be glib; the achievements of AlphaGo (which operated by a combination of machine learning and tree search techniques, and defeated Go master Lee Sedol in 2016) and its successor AlphaGo Zero (which, in contrast to AlphaGo, was trained entirely through “self-play” – it was supplied with the rules of Go and, in Kissinger’s words, “played innumerable games against itself, learning from its mistakes and refining its algorithms accordingly”) are genuinely impressive. A subsequent iteration, known simply as AlphaZero, also mastered chess – in four hours. To many observers these feats are so impressive they’re frightening. Kissinger is just the latest influential figure to join the chorus of disquiet. Billionaire space pioneer and Rick and Morty fan Elon Musk, a thought-leader in the tech world, speculated in a recent documentary that AI will treat humans with as much deference as humans treat ants.

“If AI has a goal and humanity just happens to be in the way, it will destroy humanity as a matter of course without even thinking about it. No hard feelings . . . It’s just like, if we’re building a road and an anthill just happens to be in the way, we don’t hate ants, we’re just building a road, and so, goodbye anthill.”

But there’s a crucial difference to be observed between Musk’s warning and Kissinger’s. Kissinger brusquely dismisses “scenarios of AI turning on its creators.” This is precisely what Musk is afraid of. Musk made mention of “superintelligence” in his jeremiad. His prediction wouldn’t make any sense without the notion that it’s possible to become superhumanly intelligent. But what if the idea of superintelligence is fundamentally misconceived? The notion of AlphaZero as a precursor to superintelligent AI (a notion that Kissinger at no point explicitly credits) presents a good opportunity for answering that question.

A naive reaction to AlphaZero (for the sake of convenience I’m conflating AlphaGo Zero and AlphaZero under this name) might see it as just another computer that’s good at playing games. This, AI enthusiasts will tell you, is spectacularly missing the point. But what is the point that’s being missed here? Is it the innovative leap from AlphaGo to AlphaZero, the fact that the latter has taught itself to play Go, and has swiftly surpassed all rivals, even invented its own strategies? Because you could still justly call that a computer that is good at playing games. It would be an understatement, to be sure; it’s actually a computer that’s superhumanly skilled at playing games. But what reason do we have for thinking that its superhuman skill in this particular narrow domain is going to translate to independent decision-making power in the real world, where the rules aren’t clearly stated (or even clearly stateable) and the variables of experience are not strictly limited as they are in a game? And what’s so special about being superhuman anyway?

To my naïve observer (the one who sees a game-playing thing where others see an Intelligence), there seems to be a qualitative difference between a machine executing a particular thought process and a human being exercising a particular judgement, even if both involve what looks like learning. To notice this difference is to notice that intelligence is a diverse concept, too diverse a concept for us to say with any confidence that a high level (even an unprecedentedly high level) of intelligence in game-playing is indicative of any capacity for intelligence in most of the activities that humans undertake. The AI enthusiast seems implicitly to be appealing to some bogus notion of general intelligence. In making this criticism I follow WIRED’s Kevin Kelly, who has argued that, “Intelligence is not a single dimension, so ‘smarter than humans’ is a meaningless concept” and that “Humans do not have general purpose minds, and neither will AIs.”

“Most technical people”, writes Kelly, “tend to graph intelligence . . . as a literal, single-dimension, linear graph of increasing amplitude. At one end is the low intelligence of, say, a small animal; at the other end is the high intelligence, of, say, a genius…This model is topologically equivalent to a ladder.” The prevailing view of AI holds that “AIs will inevitably overstep us onto higher rungs” of the ladder of intelligence. “[W]hat is important”, Kelly emphasizes, “is the ranking—the metric of increasing intelligence.” Kelly then goes on to point out why this model is “mythical” and “thoroughly unscientific.” Central to his argument is the fact that “we have no measurement, no single metric for intelligence. Instead we have many different metrics for many different types of cognition.” As Kelly says:

“suites of cognition vary between individuals and between species. A squirrel can remember the exact location of several thousand acorns for years, a feat that blows human minds away. So in that one type of cognition, squirrels exceed humans. That superpower is bundled with some other modes that are dim compared to ours in order to produce a squirrel mind. There are many other specific feats of cognition in the animal kingdom that are superior to humans, again bundled into different systems. Likewise in AI . . .”

The astonishment with which Go players have greeted AlphaZero’s achievement might be compared, then, to the astonishment that can be experienced when observing a crow solving in a matter of seconds a puzzle that would keep most humans vexed for minutes. AlphaZero’s only uniquely impressive feature, apart from the fact that it was created by humans (which is actually an impressive fact about those humans), is the speed at which it acquired its particular skill of superhuman cognition. It’s an astonishingly quick learner at one specific, very complicated task. It doesn’t follow that AlphaZero (or its successor) will be a quick learner at other specific, very complicated tasks. Not unless those tasks are structurally similar to playing Go, which accounts for an extremely narrow range of the tasks that humans have to perform.

Even more damaging to the prognostications of futurists like Musk is the recognition that “machine learning” is really a euphemism. The process described by that phrase is a way of creating knowledge, but beyond that it doesn’t resemble what we normally mean by “learning”. Human learning requires commitment, requires emotional investment and a good-faith acceptance of the value of being taught, an implicit affirmation of the teacher’s superiority, and authority, over the student. A machine is incapable of that, and for the same reason is incapable of playing a game in the way that any human plays a game. Kissinger observes the crucial distinction that has been lost on most people since the days of Deep Blue vs. Kasparov:

“Before AI began to play Go, the game had varied, layered purposes: A player sought not only to win, but also to learn new strategies potentially applicable to other of life’s dimensions. For its part, by contrast, AI knows only one purpose: to win. It “learns” not conceptually but mathematically, by marginal adjustments to its algorithms. So in learning to win Go by playing it differently than humans do, AI has changed both the game’s nature and its impact.”

When Deep Blue and Garry Kasparov played chess, Garry Kasparov was the only one playing chess. And when Lee Sedol faced off against AlphaGo, Sedol was the only one playing Go, the only one bringing to bear the kind of conceptual learning that Kissinger is talking about here. For this reason, any attempts to circumvent the dangers of AI by programming AIs with moral principles are doomed to failure. The same error that makes us think of AI as playing games just as humans play games also makes us think that the aptitude displayed by AIs (their “learning” and “intelligence”) makes them theoretically capable of moral instruction.

Go is, undeniably, extremely complicated. But it’s not complicated in the same way that any human life is. One could certainly overstate the incompatibility of gaming and the sophisticated formation of moral judgement. Playing a complex game like Go is bound to have an affective element. But the emotions that attend game-playing, the hopes and fears and satisfactions and frustrations that are natural concomitants to playing Go (or chess, or any good game), are not in themselves enough of a foundation on which to build the kind of sophisticated affect-informed judgements that are called for in the living of a human life. An adversarial encounter (which is what any game is) might supply a surprisingly rich stock of affective experiences, but it’s not going to allow you to understand complicated moral concepts like, say, presumption, discretion, ingratitude, generosity, pettiness, magnanimity. A person whose moral life consisted entirely of adversarial encounters would not be morally neutered (would not be incapable of moral experience) but would be morally perverted (the range of moral experiences available to them would be disablingly truncated). And to build moral subjectivity out of adversarial encounters would require you to derive a sound faculty from a faulty one, or create an advance from a limit. The limit in question is the difference between one mode of thought and another, despite their resemblance. Nobody expects an arborist to be good at carpentry. So there is no reason to believe that a computer might be capable of moral subjectivity.

It follows from this that much of the solemn headshaking and anxious handwringing that occupies discussions of AI is merely sentimental. It’s not that there’s nothing to fear from AI, it’s that our fears miss their proper object. All too often they’re fears based on the notion that an artificial superintelligent being will have no compelling reason not to coerce or harm, dispossess or kill a human being who resists its designs, and will face no effective impediments against doing so. But if it makes sense to be afraid of AI then it’s only (as if this weren’t enough!) as another vector of human evil and human error.

The idea that we might create an intelligence that will decide to enslave or exterminate us is mere science-fiction. Yet there’s good reason to be wary of complacency when it comes to AI. To understand the potential impact of this new technology, you might think of a very old one, the stirrup, an invention that changed the face of the planet. (I owe this example to Neil Postman, who mentions it in Technopoly – though note that the example is somewhat controversial). Before the stirrup, large-scale mounted combat was inconceivable. The stirrup changed the possibilities of warfare, so it had an immeasurable impact on human history. It meant that, for those who possessed this technology, populations could be more swiftly conquered and ill-equipped enemies more effectively trounced. Whoever invented the stirrup can’t have foreseen its implications, and may well have considered it simply a safety feature for horse riding. The difference between AI and the stirrup is that we know AI will have unforeseeable implications, and we’re very worried about that. It’s a known unknown. Still, AI is basically like the stirrup in that it’s another technological innovation, and it will have a particular narrow application; much broader than keeping you on your horse, of course, but also much narrower than enacting the level of self-and-other-consciousness that denotes moral agency. The trouble lies in the fact that applying it will make new choices available to us, will make us more powerful and therefore a greater danger to ourselves.

We can make sense of the threat posed by AI, then, by appealing to what in military circles is called RMA, Revolution in Military Affairs. The stirrup, once its military application was realised, inaugurated an RMA. So did the invention of gunpowder. Advances in AI can be expected to usher in a new RMA. And like all previous RMAs, it will entail the discovery of more efficient means of killing people. (We haven’t strayed far from our starting point; Go was reportedly a favourite pastime of Chairman Mao, and has long been considered a valuable military simulator).

The analogy of the stirrup is not meant to deflate the notion that AI might unleash carnage of an unprecedented kind, or even on an unprecedented scale; it’s rather meant to deflate the notion that AI has no precedent in being a technology whose full ramifications lie beyond the horizon of our understanding. One shouldn’t lightly compare anything to atomic weaponry, but think of the invention of the stirrup as a slow-motion detonation, sending out a centuries-long shockwave. The suffering of people caught in that shockwave was as real as that of the victims of Hiroshima and Nagasaki, if never as immediate and seldom as intense. Note also that the victims of Hiroshima and Nagasaki were not killed by innovative physics. They were killed by human action. Collateral’s Vincent is wrong to say that the bullet and the fall killed the fat Angeleno. He killed the fat Angeleno.[i] We fear the bomb and we fear guns (as a very intelligent person might once have feared the newly-invented stirrup) with good reason. We fear what humans might do with them. We fear that they might be the media through which the worst human impulses are transmitted into action.

It’s tempting to say that if the hysteria over AI has any rational basis, it’s this: AI as a potential RMA. But it makes sense to speak of AI not only as a way of classifying those technologies that could most plausibly lead to a new RMA, but also as a way of classifying those emerging technologies which most conspicuously realign our self-understanding toward that of computational beings. This is the other face of the AI threat.

One might plausibly say that “Artificial Intelligence” is the name we give to certain technologies that attenuate moral responsibility and disseminate rational calculation. This can be seen even in the search engine, which imparts an appetite for instantaneous and effortless access to information and makes us accustomed to outsourcing our thinking to an algorithm. But an algorithm does not think, so what we’ve really done is abandoned thinking altogether in favour of computation. We start to become incapable even of noticing the difference between thinking and computation. The danger here is not that we might create a superintelligent machine but that we might cease to value our own humanity to the extent that we turn ourselves into machines, or worse, into components within a machine, an input of data in a mindless computation.[ii]

The proper object of our fear, then, is not annihilation but transformation. In fairness to science-fiction (which I earlier rather snobbishly saddled with the adjective “mere”), it has concerned itself as much with the horrors of transformation as with the terror of annihilation. The pedigree of the genre is precisely a literary reckoning with those horrors. Elon Musk at least unconsciously acknowledges this ancient literary theme when he refers ominously to AI research as “summoning the devil”. The Faustian connotations of AI are readily apparent. Frankenstein is the necessary modern update of the Faust legend, an update not only in setting and premise (substituting scientific experiment for satanic pact) but also in its innovative transfiguration of Faust’s central theme.[iii] Faust’s sin, like Prometheus’s, is hubris, but Victor Frankenstein’s sin is something more. This is very often overlooked. Brendan Foht has shrewdly concluded that “the horror of the novel is not in the threat of violence from the creature, but in the boundless depravity of the man repulsed by the misery of the person he created in his own image.” Frankenstein is not confronted by an agent of divine justice. His monster confronts him as a creature confronting his creator. The horror of Frankenstein is Victor’s choice of control over care. Frankenstein is a tale of appallingly bad parenting.

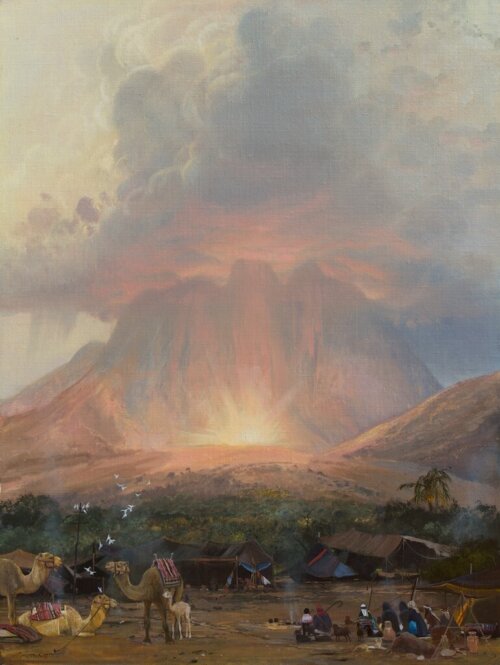

I think this brings into focus something essential about our relationship with technology. Whenever we are horrified by technology we are horrified by some aspect of homo faber. Because horror is only coherent as a response to human moral failure. If we were to put the point in theological terms, we might say that all coherent demonology is reducible to hamartiology (the study of sin). There is no substitute for learning to hate sin, and our efforts to dream up such a substitute inevitably lead to superstitious mythmaking. We imagine our technology to be capable of things that only we are capable of. We project divine agency onto that which doesn’t even qualify for human agency. Roko’s Basilisk, the hypothetical demonic AI that will punish all those who fail to aid in bringing about its existence, is, as has been repeatedly pointed out, a secular analogue of Pascal’s Wager. The problem, as ever, is bad theology. The twinned impulse of AI enthusiasm/alarmism, taken to its logical conclusion, becomes a kind of atheology, a fearful and rapturous contemplation of the void. This, it seems to me, overlooks one of the central facts of morality: cosmic indifference is nothing compared to human contempt. Or human indifference, for that matter.[iv] We just forget that ordinary human wickedness (i.e. sin) is worth fearing, probably because it’s just about the only thing that’s really worth fearing. This is why our most powerful and enduring myths all contain warnings against transgression, and why so much intellectual labour in our officially impious culture is devoted to propagandizing for transgression.

It’s too late, then, to think of Frankenstein as a cautionary tale, at least in so far as it’s a parable about Western civilization as a whole. Our sentimental hopes and fears about AI demonstrate the belatedness of such caution. Henry Kissinger appears alert to the danger of falling into such sentimentality, because alert to the tendentiousness of those assumptions about consciousness that govern most AI research (and which are scrupulously detailed and comprehensively rebutted in Hubert Dreyfus’s magisterial What Computers Still Can’t Do[v]). For instance, Kissinger describes AI as operating “by processes that seem to replicate those of the human mind”, where most writers would happily omit the “seem.” Kissinger also articulates with rare lucidity what is truly at stake in the AI debate:

“Ultimately, the term artificial intelligence may be a misnomer. To be sure, these machines can solve complex, seemingly abstract problems that had previously yielded only to human cognition. But what they do uniquely is not thinking as heretofore conceived and experienced. Rather, it is unprecedented memorization and computation. Because of its inherent superiority in these fields, AI is likely to win any game assigned to it. But for our purposes as humans, the games are not only about winning; they are about thinking. By treating a mathematical process as if it were a thought process, and either trying to mimic that process ourselves or merely accepting the results, we are in danger of losing the capacity that has been the essence of human cognition.”

The threat here is one that strikes at us as moral beings. It is not merely an existential threat; it is more horrifying than that. This is what Henry Kissinger perceives and Elon Musk doesn’t. Artificial Intelligence is to be feared not because it may turn its superior intelligence against us and condemn us to mere annihilation, but because it may redefine in appalling ways, on an individual as well as on a civilizational scale, what it means to be human.

The even more disquieting thought is that AI’s redefinition of humanity is a process that is already well underway. Kissinger’s article is called “How the Enlightenment Ends”. This begs a question. What if the advent of AI is not the Enlightenment interrupted but the Enlightenment consummated?

Notes

[i] See Collateral. Dir. Michael Mann. 2004. The film contains, aside from an underrated performance from Tom Cruise, a great scene in which the everyman (Jamie Foxx as taxi driver Max) exposes the fraudulence of the debonair nihilist. It’s a subtle and powerful moment, this moment when a simple man sees with perfect clarity, and with righteous contempt, that a heartless killer’s steely disenchantment is really a form of ignorance. This is relevant to the discussion of horror below.

[ii] This notion has a vague resemblance to one key aspect of that most voguish brand of philosophical posthumanism, accelerationism. And so much the worse, from an accelerationist perspective, that the resemblance is no more definite. I imagine that an accelerationist would have cutting things to say about my argument here, perhaps that I’m guilty of a failure to think through (with anything more than a superficial gesture at the insights of sophisticated philosophy with regard to post/modern politics and non/human consciousness) what it really means in a more than metaphorical sense for a human to be made a machine, or become an input in a computation. I would simply note that such criticisms must come from a place of fundamental disagreement with my premises. If it wasn’t clear already, I am operating on the (desperately uncool) assumption of the validity of a broadly humanist philosophy. I make no apologies for this assumption. I don’t see how it could jeopardise my argument.

[iii] Our reinvention of Mary Shelley’s powerful tale (whose lineage can be traced back further than Faust, to the Golem of Prague, or further, to Milton’s Satan and to the biblical Adam and Eve, or further still, to Prometheus – recall Shelley gave her novel the subtitle The Modern Prometheus) continues to this day. Blade Runner and its sequel Blade Runner 2049 essentially tell the story of the race of monsters that Victor Frankenstein fears he will bring into existence if he gives his Adam an Eve. The replicants are, like Frankenstein’s monster, possessed of superhuman physical powers and inhabit a world of humans determined to ignore their shared humanity. (For a brilliant – if idiosyncratic – analysis of 2049, see Padraig Hythloday’s The Love Song of KD6-3.7. His discussions of the psychology of the film’s villain Niander Wallace, and of the peculiar relationship between the protagonist, K, and his AI girlfriend, Joi, are particularly germane).

[iv] I know I’m opposing a very influential understanding of horror here. Lovecraftians make much of the fact that what’s truly monstrous, truly horrifying, is what’s unknown and unknowable, what’s categorically beyond human experience (see, for instance, Graham Harman’s Weird Realism: Lovecraft and Philosophy). But I’d argue that Richard Yates is a better horror writer than H.P. Lovecraft. Frank Wheeler’s complacency and negligence are far more horrifying than Cthulhu’s massive inscrutability. The other literary works I’d nominate as outstanding explorations of horror would also be by realist writers, writers such as Raymond Carver (e.g. “So Much Water So Close to Home”) and Alice Munro (e.g. “Child’s Play”) or, for horror with a more explicitly theological bent, Flannery O’Connor (Wise Blood), or even Saki at his least burlesque and most mordant. Another way of delineating the drift of my thought here would be to say I follow the late Stanley Cavell’s view that horror is “the perception of the precariousness of human identity,…the perception that it may be lost or invaded, that we may be, or may become, something other than we are, or take ourselves for” (The Claim of Reason, Oxford UP, 1979, pp.418-419).

[v] A key passage: “Human knowledge does not seem to be analysable as an explicit description…A mistake, a collision, an embarrassing situation, etc., do not seem on the face of it to be objects or facts about objects. Even a chair is not understandable in terms of any set of facts or ‘elements of knowledge.’ To recognise an object as a chair, for example, means to understand its relation to other objects and to human beings. This involves a whole context of human activity of which the shape of our body, the institution of furniture, the inevitability of fatigue, constitute only a small part. And these factors in turn are no more isolable than is the chair. They may all get their meaning in the context of human activity of which they form a part” (MIT Press, 1992, p.210).