AI and the Dehumanization of Man

Strong Artificial Intelligence is the idea that computers can one day be constructed that have the abilities of the human mind. The contrast is with narrow AI, which is already with us: the notion that computers can be made to do one thing very well, such as the Watson computer that won at Jeopardy!, or Deep Blue, which beat Kasparov at chess.

Strong AI, or artificial general intelligence, would mean that a robot fitted with a computer brain could move around in the world as competently as a human. As F. H. George wrote in a letter to the editor of Philosophy (vol. 32, 1957, pp. 168–169): “finite automata are capable of exhibiting, at least in principle, all the behaviour that human beings are capable of exhibiting, including the ability to act as poets or creative artists and even to wink at a girl and mean it.”[1] This reference to a wink has a poetic touch that captures a sense of genuine humanity.

The difference between strong and narrow AI is like the difference between two people. One is an idiot savant who can do one thing incredibly well, such as recognizing prime numbers of enormous length[2] or, like Kim Peek, reading two pages of a book simultaneously with over 90% recall. The other is someone with enough nous to handle the wide range of tasks that any normal human being has to face, engaging in a lengthy conversation one minute and enjoying a work of fiction the next.

Rhetorically Minimizing the Machine/Human Divide

Since Strong AI is not a reality, computer scientists and philosophers who wish for its creation need to find ways to make their speculations plausible and convincing. A common rhetorical strategy adopted by those arguing that Strong AI might one day be a reality is to claim that people themselves are machines. In that way, Strong AI already exists in a sense. We are those machines, though the notion of Strong AI implies that an engineer or computer scientist could make one of these machines through something other than sexual reproduction.

This rhetorical ploy means talking up machines while talking human beings down in order to shorten the perceived distance between the two – significantly lowering the bar for meeting the burden of proof.

It is claimed that human beings have a meat machine in their heads that follows algorithms just as a computer does. What difference is there if one is made of silicon and copper and the other is carbon-based? “Don’t be a biological chauvinist!” they implore.

Thus, to convince human beings that their minds are just Turing machines (computers), proponents must give people an ever worse conception of their own abilities. Instead of demonstrating that computers are far more fantastic in their scope than one might have thought, they try to persuade us that what people are doing when they think is far more machine-like and unremarkable than they ever realized.

An analogy might be with the movie Twins where the visual joke is that Danny DeVito and Arnold Schwarzenegger are supposed to be long lost identical siblings.

Strong AI proponents are trying to convince us that Danny DeVito is a lot taller, more muscular and handsomer than he looks, and that Arnold is shorter, weaker and generally far more pathetic than he is usually imagined to be. The 99-pound weakling and Charles Atlas are really the same.

It is a strange business, because narrow AI, the only AI that actually exists, is so incredibly stupid by human standards.

As Piero Scaruffi has pointed out, think about the difference between sitting through a computerized list of alternative reasons for which someone might be calling a call center and talking to a human operator with whom it is possible to get straight to the point. In the first instance, AI is asking that people dumb themselves down to its level in order to interact with it in a highly frustrating manner.

There are two ways of reaching the Turing point, the point where computers become indistinguishable from people: make computers smarter, or dumb people down to the level of machines. Computers are mere machines and rule-following devices. They need highly structured environments governed by rules and regulations, such as clearly delineated roadways and signs everywhere for self-driving cars. And rules and regulations make people stupider.[3]

Then comes the other move. Having talked people down and, they hope, dashed their self-conceptions, proponents talk up computers. They point to the entirely imaginary future accomplishments of computers endowed with artificial general intelligence: the old trick of the IOU that never gets cashed.

Having as Exhibit 1 something that does not exist is really a bit embarrassing. It amounts to saying “Boy, oh boy, oh boy, you are really going to look stupid when my walking, talking, poetry writing, winking robot comes in and steals your girlfriend.” Perhaps it might be called “the argument from Schadenfreude.” It has the benefit for them that we will all grow old and die before the bluff can be called, with the predicted “singularity” just being endlessly deferred.

These AI beings, it is claimed, are going to be superhuman and our overlords. There is a definite wistful quality to these imaginings. Since most Strong AI proponents are atheists, the robots will be as close as they believe they can get to the divine. It is a desire for transcendence after all, just like that of the Neanderthal theists. Perhaps, with suitable implants, they can do more than kiss the hem of the overlords’ garments and actually become them: Charles Atlas after all.

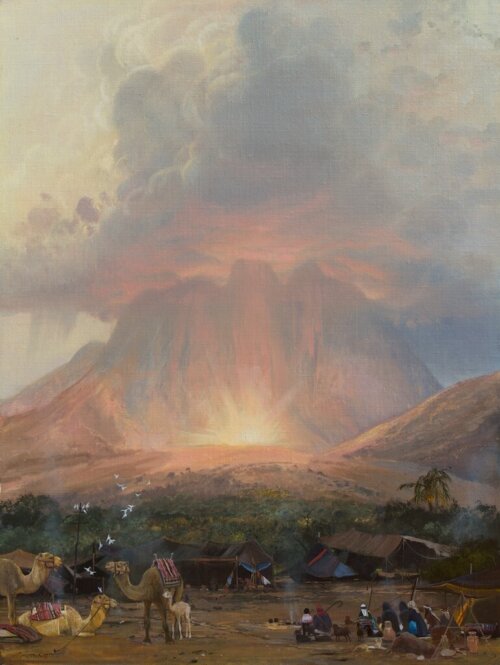

Inverting the Great Chain of Being

The Great Chain of Being is an ancient and cross-culturally ubiquitous idea that reality consists of a hierarchy with matter at the bottom and God at the top. Man is in the “metaxy,” the in-between: neither god nor brute.

The Great Chain of Being can be found in Plato’s Cave and in Buddhism. It appears either as a hierarchy of physical reality, psyche, nous (the Forms) and the Form of the Good, or as a hierarchy of body, mind, soul and spirit.

Devout materialists invert this hierarchy and worship atoms and molecules as ultimate reality. This involves the higher admiring the lower.

This strange situation does not really get rid of God completely, nor mind. They are simply withdrawn from their proper place in the hierarchy and projected downward.

Materialists might actually be on to something. Metaphysics relies on intuitively derived axioms, and materialists have one correct intuition. More than one religion posits God at both the top and the bottom of the hierarchy. In Christianity God describes himself as the Alpha and the Omega, the beginning and the end. He is the Creator who brings physical reality into being and sustains it, and also the endpoint toward which creation evolves as it seeks its maker. Thus God is both “below” matter, as its origin and ground of being, and “above” it, as its telos and final destination. Materialists could be said to be intuiting the lower location of the divine while rejecting the higher. Life must have some meaning to be bearable, so materialists admire and seek the lower, regarding it as superior and more real. Hence the admiration some of them have for computers.

Consider a human being who admires AlphaGo, a computer program that can do just one thing: play a strictly rule-governed game. The human can do thousands of things pretty well and is vastly more intelligent. Such admiration is a case in point.

Gnostics make the opposite mistake, seeking only the Omega and rejecting the Alpha. Plato’s Cave is, importantly, a circuit: the philosopher exits the cave but then reenters it. In Christianity, God declares creation good, whereas Theravada Buddhism denounces form as an illusion and makes escaping rebirth the goal of life. Mahayana Buddhism, influenced by Greek thought, rejects this Gnostic attitude and embraces the marketplace of ordinary existence.

Materialists are committed to determinism. Logically, determinism means that the Big Bang is effectively the Deist Creator. All agency, free will, intelligence, rationality and decision-making are withdrawn from humanity and attributed to the Big Bang, which comes to have the attributes of God. The behavior of plants, animals and humans often seems patently intelligent, coordinated and purposeful. Since all creatures are deemed automatons with no free will, intelligence and purpose must be properties of the Big Bang. No one can be called “intelligent” if his thoughts and actions are all the product of someone or something else. God is banished from Heaven and the transcendent and projected downwards. But, crucially, He is not eliminated.

Suppose the free will proponent counters that minds and brain states are not the same thing, and that matter cannot be said to be logical or rational, let alone intuitive and emotionally sensitive. The determinist may then claim that the free will advocate is begging the question: matter can indeed instantiate properties of mind. But this just means that matter is not merely physics, biology and chemistry. It embodies mind, which is not something present in any of those sciences. The properties of mind are denied to a nonmaterial mind and shoved down one level to physical reality. But this entails that physical reality is not at all as it is ordinarily conceived. On this view, physical reality actually thinks, reasons, has concepts, is moved by beautiful music, loves its children, and wonders about its own existence. None of those things can be reduced to the non-thinking, mindless physical mechanisms studied by science. Such a view is more consistent with panpsychism, the notion that mind underlies all aspects and levels of complexity of the universe.[4] It could be that God is in all, through all and above all. Likewise, Strong AI proponents try to take human consciousness and shove it down into computer operations while denying that anything special is going on in actual humans.

Atheist materialist biologists such as Richard Dawkins also engage in reverse Gnosticism, rejecting the higher and worshipping the lower. The same rhetorical dynamic found among Strong AI apologists plays out in his writing. For Dawkins, humans are the stupid dupes of their Machiavellian, scheming genes. We are “lumbering robots,” an interesting turn of phrase which implies that Dawkins is no fan of Strong AI. According to Dawkins, humans hope and plan, fall in love and have sex, and try to find meaning, while all along we are being used by our genes for their own propagation. This implies that it is in fact the lower that has real sentience, purpose and intention, and that it has succeeded in using the higher for its own ends.

Rupert Sheldrake, former Director of Studies in biochemistry and cell biology at Clare College, Cambridge, points out that this view is incoherent and rhetorically untenable. Cell replication is said to involve DNA and elements of the cell “reading” or “following” the coded “information” in the DNA. This process is supposed to be physical, although it also requires that mysterious thing, “life.” And yet this description of cell replication relies on the metaphors of “reading,” “following” and “information,” which are mind-dependent. It is an anthropomorphic projection intended to illuminate physical processes. The projection continues when Dawkins compares genes with gangsters.

“The DNA molecules are not only intelligent, they are also selfish, ruthless and competitive, like ‘successful Chicago gangsters.’ The selfish genes ‘create form,’ ‘mould matter’ and engage in ‘evolutionary arms races’; they even ‘aspire to immortality.’” Genetic programs are supposed to be “purposive, intelligent, goal-directed.” By contrast, human beings are “lumbering robots” manipulated by scheming genes to do their bidding.[5]

For Dawkins, human purposes are “purposes,” meaning irrelevant shams subordinated to genes. Yet, on his view, genes have real purposes that actually come to fruition and correspond to the real state of affairs. However, when questioned, biologists backtrack and admit that these things are metaphors. Sheldrake writes that “most biologists will admit that genes merely specify the sequence of amino acids in proteins, or are involved in the control of protein synthesis. They are not really programs; they are not selfish, they do not mold matter, or shape form, or aspire to immortality.”[6]

Biologists like this take human characteristics away from humans and push them one level down. They explain the unfamiliar world of microscopic genes by appealing to everyday human experience, but then claim that everyday human experience is mistaken and that genes rule the roost. Other biologists might instead appeal to evolution as the Prime Mover, with similar results. Determinists likewise claim that our experiences of free will, reasoning and agency are illusions, but then attribute these capacities, in effect, to the God-like Big Bang.

How do we understand the agency of the Big Bang if we have never experienced agency? How is it helpful to describe purposes, intentions, reading, following instructions at the microscopic level if humans are not really doing any of these things in any meaningful way? Explanation involves explicating the unfamiliar in terms of the familiar, but this mode of thought wants to kill off the familiar once it has done its job.

Likewise, for the Strong AI proponents, if all human thought is algorithmic, and thus something attainable by computers, we are not doing what we seem to be doing. If rule-following is all that is going on, then machines are likely to be better and faster rule-followers than we are.

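However, Gödel’s incompleteness theorem shows that no consistent, mechanically checkable formal system can prove every truth of elementary arithmetic, the arithmetic of addition and multiplication of whole numbers. Thought, even about multiplication, therefore cannot be completely mechanized.[7] Alan Turing proved that the halting problem is undecidable: no general procedure can determine whether an arbitrary program will ever finish running. This establishes that a general intelligence machine cannot be built for discovering algorithms or testing them.[8] Computer programmers must exercise great ingenuity and effort to construct complex algorithms. It would certainly make their lives easier if there were an algorithm to do this!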
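Turing’s halting argument can be sketched in a few lines of Python. This illustrates the diagonal construction under stated assumptions. It is not Turing’s own formulation, and the names `build_contrary`, `claims_halts` and `pessimist` are hypothetical. The idea is to take any function that claims to predict whether a program halts. One can then build a program that asks that function about itself and does the opposite, so the prediction is wrong either way. The code illustrates only the undecidability result, not the wider conclusions drawn from it here.

```python
from typing import Callable

# Assumption for this sketch: a "program" is a zero-argument Python function.
Program = Callable[[], None]


def build_contrary(claims_halts: Callable[[Program], bool]) -> Program:
    """Given any claimed halting decider, build a program that it misjudges."""

    def contrary() -> None:
        if claims_halts(contrary):
            # The decider predicts "halts", so loop forever and falsify it.
            while True:
                pass
        # Otherwise the decider predicts "runs forever", so fall through and halt.

    return contrary


# Usage with a hypothetical decider that always answers "never halts".
def pessimist(program: Program) -> bool:
    return False


program = build_contrary(pessimist)
program()  # returns immediately, so the verdict "never halts" was wrong
print("pessimist predicted halting:", pessimist(program))
```

A decider that answered “halts” would instead yield a program that never returns. That case cannot be demonstrated by running the code; it has to be argued.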

Strong AI anticipates that computer intelligence will far surpass the human and become God-like. Since computers are inanimate, it would be a matter of the physical ruling over the living, the lower conquering the higher and thus a simple inversion of the Great Chain of Being.

People like Ray Kurzweil even fantasize about uploading their consciousness into a computer and attaining immortality that way, a religious aspiration if ever there was one. The living Kurzweil aspires to the level of dead matter, dead things having the advantage that they do not die.

It seems that humans cannot live without hierarchies and need something to worship. If God is rejected as unworthy or nonexistent, a substitute will inevitably be found even if it means turning everything upside down.

Emergent Complexity – Consciousness and Life

Thomas Nagel stands on the outer edges of what is regarded as acceptable among atheist, materialist analytic philosophers. In Mind and Cosmos he argued for panpsychism, and for consciousness being as fundamental to reality as matter. It is a fairly bland and tepid book that apparently generated a lot of heat from analytic philosophers for being heretical.

Consciousness either permeates all material existence all the way down to individual atoms, or it suddenly springs into existence when matter is organized complexly enough. The possibility of Strong AI rests, it seems, on betting that consciousness is an emergent phenomenon, rather than being a matter of degree. That, it may well be, is the wrong bet.

There seems to be some connection between consciousness and life. Computers are not alive, and this may also prevent them from becoming conscious.

Regarding Machines as Superior to Humans

Having experienced WWI, the author and soldier Ernst Jünger fell in love with the terrifying mechanized landscapes of war. He felt like he was being confronted with a superhuman reality of machines – cold, merciless, overwhelming, irresistible, oblivious, and deadly. He, miserable flesh and blood and mortal, was a nothing. The machine was stronger, did not get tired, was louder and superior.

If not a confrontation with God, the battlefield was at least akin to the battles of the Greek gods with massive projectiles being hurled into the sky; implacable tanks, barbed wire, machine guns, mortar fire and artillery; perhaps even a few planes dropping bombs. It was a case of being awestruck by a new kind of futuristic reality. Jünger regarded it as sublime.

Strong AI proponents seem to have a similar reaction to a wholly imagined machine-dominated future. They look at those puny cellphones and laptops and imagine the new machine reality. At once they claim that people are already indistinguishable from machines, in which case the future is already here, and puff up forecasts of computational wonders yet to be beheld.

Since we have no idea how consciousness is possible, endowing a machine with sentience is a rather ambitious goal. Even materialists have been known to acknowledge that explaining how consciousness and subjectivity could be generated by purely physical processes is the “hard problem.”

One way philosophers have described the difference between minded and mindless things is that, for a creature with a mind, there is something it is like to be that creature. This stems from Thomas Nagel’s famous 1974 article “What Is It Like to Be a Bat?”

Clearly, as of now, there is nothing it is like to be the circuits and software of a computer, any more than there is something it is like to be dead.

Strong AI Fantasists Exclude the Necessarily Vague and Intuitive Aspects of Consciousness

Human beings have two modes of consciousness, related to the left and right hemispheres of the brain.[9] Rhetorically, the Strong AI camp is committed to emphasizing the left hemisphere (LH) because it is the more robotic of the two. The left hemisphere permits narrow focus and the ability to concentrate on one thing at a time. It is also the hemisphere associated with concepts, the inanimate, the known, the man-made, machines, abstractions and speech.

The right hemisphere (RH) deals with broad, contextual awareness and the immediate experience of the life world. It favors living things, novelty, uniqueness, gestalt comprehension (big-picture thinking) and problem-solving. The RH is also associated with emotion and with understanding emotional expression, both facial and verbal. Learning a new skill begins with the RH. Once the skill is mastered to the point that active thought is not required, it becomes a relatively automatic function of the LH.

The more the right hemisphere is emphasized, the more implausible Strong AI is likely to seem. The Star Trek: The Next Generation episode “Data’s Day” (episode 85) deals with the android Data and his inability to comprehend human emotional life. Despite his protestations that he has no emotions, he clearly does; it is just that his emotions have no more sophistication than a small child’s. This is not an interpretation imposed on the episode. At one point Data claims he is incapable of feeling apprehensive, only to notice later that he is tapping his finger nervously.

Without this rudimentary emotional understanding, it would seem, Data would be incapable of appreciating anything at all about emotions. An analogy would be trying to explain sight to someone blind from birth.

The writers of “Data’s Day” demonstrate a nice insight into what is missing from an exclusively left hemisphere approach in a way absent from Strong AI proponents’ speculations.

Since the RH deals with novelty and the anomalous, it must be fluid and flexible and cannot rely on what is already known. Omniscience might provide the ability to know the correct response to every life situation, but no finite creature has infinite knowledge. Therefore every living creature is routinely confronted with the unknown and must improvise an appropriate response. This ability to respond pretty well to hugely varied circumstances is what gets called “general intelligence.” This kind of thinking includes the intuitive and informal. It is this aspect of the life of the mind that seems especially impossible for a man-made mechanism to replicate.

The RH is experiential, the LH theoretical. Strong AI needs to incorporate both modes of consciousness to succeed.

A thinker must straddle both hemispheres to have anything insightful to say about the human situation, what it is to be a human being, emotion, meaning, the nature of mind, ethics, beauty and the life world. Epistemically, these things involve ostension, which means showing, exhibiting, pointing at. They simply cannot be made fully explicit. Plato, the father of Western Philosophy, was great at all this.

Those whose eyes light up at the prospect of machine intelligence tend to be those with the most impoverished conception of what a human mind is.

Most proponents of Strong AI imagine that human beings employ algorithms like computers do but that people are just “more complicated.” Thus, once computing gets sufficiently complicated, computers will gain artificial general intelligence. Algorithms, however, are strictly LH phenomena.

Aristotle argued correctly that there can be no such thing as a good (LH) moral theory – a theory that provided instructions about how to behave ethically in every given situation – because such behavior would need to take account of the exact characteristics of the people involved and of the precise circumstances.

Therefore there is no algorithm for producing moral behavior. Moral theories like utilitarianism[10] only attempt to produce general heuristics, broadly described goal-driven rules of thumb, and those heuristics turn out to be abominations far more likely to do harm than good.

Even little children have a sense of fairness, but they have no theoretical explanation of it, and neither does anyone else. Reciprocity is fairness. If it is claimed that this idea has been biologically selected and that is all, then “fairness,” and thus morality, does not exist. It has been explained away.[11] Similarly, the objective existence of beauty sometimes gets derided as purely a matter of taste or arbitrary preference.[12] In fact, there is widespread agreement about many beautiful things. One-day-old babies look longer at pretty faces, faces that nearly all people of every race also agree are beautiful.

These things are real. They are just not susceptible to precise definitions and as such are largely outside the realm of the LH. Without a sense of morality and beauty, AI would be monstrous.

Even someone with as much affection for sufferers of autism as Sam Harris has noticed the problem with instructing imaginary generally intelligent computers. It would be impossible to write sets of instructions that would stop them from, for instance, killing everyone as a way of ridding humanity of cancer. Or at least he seems to have noticed that it would be extremely difficult, though impossible is actually the correct answer.

Can a New Epistemology Help in the Creation of Strong AI?

In “Creative Blocks,” David Deutsch acknowledges that computers are nothing like human minds, though he thinks the laws of physics imply that artificial intelligence must be possible. He takes it for granted that it cannot have anything to do with having a soul (haha, good one!), hence his appeal to physics. He writes:

And in any case, AGI cannot possibly be defined purely behaviourally. In the classic ‘brain in a vat’ thought experiment, the brain, when temporarily disconnected from its input and output channels, is thinking, feeling, creating explanations — it has all the cognitive attributes of an AGI. So the relevant attributes of an AGI program do not consist only of the relationships between its inputs and outputs.

The upshot is that, unlike any functionality that has ever been programmed to date, this one can be achieved neither by a specification nor a test of the outputs. What is needed is nothing less than a breakthrough in philosophy, a new epistemological theory that explains how brains create explanatory knowledge and hence defines, in principle, without ever running them as programs, which algorithms possess that functionality and which do not.

At best this aspiration has the sweetness of the ingénue and neophyte. Such adorable innocence! Professor Deutsch should be urged to look again at the jejune nonsense that is modern epistemological theory. Three thousand years of attempts to explain and define knowledge have revealed the impossibility of defining “knowledge” with any kind of precision, i.e., of stating the necessary and sufficient conditions for legitimate knowledge claims. Anybody with even a vague familiarity with that history will know that the idea that “what is needed is nothing less than a breakthrough in philosophy, a new epistemological theory that explains how brains create explanatory knowledge” has a pigs-might-fly quality to it. Anyone reading this is urged to look up “epistemology” in any online encyclopedia of philosophy to see what passes for modern epistemological theory. If yet another such theory were to succeed in the way Deutsch envisions, it would be the equivalent of a knock-down syllogistic proof of God’s existence or nonexistence. It would be like settling the question of free will once and for all, or definitively determining the meaning of life or its lack of meaning. In other words, the very nature of reality and the human condition would have ceased to exist as we know them. But, please Dr. Deutsch, be my guest!

Deutsch’s use of the word “brain,” instead of “mind,” suggests a mighty confusion. A brain is a lump of meat. Whether it has succeeded in producing “knowledge” is going to be a question for minds to assess. Brains are physical objects whose properties can be studied from the outside without necessarily revealing their thoughts. “Knowledge” is conceptual, and concepts live in the realm of the abstract. That is why two people can know the same thing even though their brains are quite different. Epistemological theories are conceptual. If it is brains he is concerned with, then it is a neurophysiological theory he is asking for. Having come up with that, he would need to correlate it with an epistemological theory. Most of those are garbage, with almost no shared agreement among thinkers whatsoever, so again, good luck with that!

We need knowledge. We rely on it. We clearly believe in it. It is even a contradiction to claim that knowledge is not possible, because this implies it is known that knowledge is not possible. Yet it is a perennial philosophical topic that has no precise answer. Roger Penrose’s claim that insight into mathematical truth involves the mind contacting the Platonic world of Forms is an appealing one. Unfortunately, it is not one that can be programmed into a computer or reduced to an algorithm, which amounts to the same thing.

Every attempt to define what counts as knowledge has failed. Autistic types, and materialists who resemble them by metaphysical conviction rather than by brain deficiency, might be willing to give up on beauty and morality. Presumably, though, relinquishing claims to knowledge would be a step too far. There is no reason at all to think that a definition of knowledge will ever exist that is more than speculative.

Knowledge is no more likely than beauty or goodness to be nailed down as a strictly definable concept, with a definitive test for whether something counts as “knowledge” or not. For these reasons, knowledge, goodness and beauty will never be the subjects of algorithms.

Strong AI’s Conception of Algorithms Has Platonic Implications

Strong AI claims that minds are algorithms and that algorithms produce minds. It also claims that how the algorithms are instantiated is irrelevant: it matters not whether cogs, wheels, brains, computers or water pipes are involved. Roger Penrose points out that this makes Strong AI proponents dualists.[13] According to them, algorithms are abstractions that can have any number of concrete realizations. They have a nonmaterial existence. Thus Strong AI relies on Platonic Forms, the very sort of thing, like souls, that its proponents are most keen to dispense with.

Strong AI proponents start from materialist assumptions but then posit algorithms that preexist any mind. Yet algorithms need mind to exist. They are purely conceptual, after all, not physical. They are simply information. An arrangement of iron oxide on a piece of tape is not itself information until it is decoded; ink on a page is nothing until it is read. It is not possible to follow instructions if it is not known that there are instructions to follow.

Machine Learning?

Following algorithms does not require real understanding. The instructions are provided by the programmer and the machine does what it is told. The phrase “machine-learning,” however, sounds like some way out of this dictatorship of the programmer.[14]

With bottom-up algorithms, a procedure is laid down for the machine to “learn from experience.” The system must be run many times, performing its actions on a continuing input of data, with its rules of operation being continually modified in response.

The goal of this “learning” has been clearly set in advance, e.g., to identify a person’s face, or a species of animal. After each iteration an assessment is made and the system is modified with a view to improving the quality of the output. This assessment requires that the correct answer is known in advance.

But “the way in which the system modifies its procedure is itself provided by something purely computational specified ahead of time.”[15] This is why the system can be implemented on an ordinary computer.

Penrose writes that the key distinguishing feature of bottom-up as opposed to top-down programming is that “the computational procedure must contain a memory of its previous performance (‘experience’), so that this memory can be incorporated into its subsequent computational actions.”[16] It should be noted that mistakes and machine learning are inextricably interlocked: without “mistakes” the system could not function. It is only with top-down programming that computers have significantly outperformed humans, in areas such as numerical calculation or calculation-heavy games like chess.
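The bottom-up procedure described above can be illustrated with one of the oldest learning rules, the perceptron. This is a swapped-in example chosen for brevity, not one taken from Penrose, and the names `train_perceptron` and `predict` are hypothetical. It shows the features the passage stresses:

- The correct answers (labels) must be known in advance.
- The rule for modifying the system is fixed before training begins.
- The weights serve as the “memory” of previous performance.
- The weights change only when the system makes a “mistake.”

```python
def predict(weights, x):
    """Return +1 or -1; weights[0] is the bias, the rest pair with x."""
    activation = weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))
    return 1 if activation >= 0 else -1


def train_perceptron(samples, labels, epochs=20, rate=1.0):
    """Classic perceptron rule; labels must be +1 or -1 and known in advance."""
    weights = [0.0] * (len(samples[0]) + 1)  # the system's "memory"
    for _ in range(epochs):
        mistakes = 0
        for x, y in zip(samples, labels):
            if predict(weights, x) != y:  # the update fires only on a "mistake"
                mistakes += 1
                weights[0] += rate * y
                for i, xi in enumerate(x):
                    weights[i + 1] += rate * y * xi
        if mistakes == 0:  # every known answer reproduced, so stop
            break
    return weights


# Usage: learn logical AND, with the correct answers supplied in advance.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [-1, -1, -1, 1]
weights = train_perceptron(samples, labels)
print([predict(weights, x) for x in samples])  # prints [-1, -1, -1, 1]
```

On the four cases of logical AND, the loop keeps adjusting its weights until it reproduces every label. Each step of that adjustment is itself an ordinary computation specified ahead of time.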

Machine learning does not really change anything and neither does parallel versus serial processing. Whether computational actions are performed one at a time or a task is divided into sections and the sections are tackled simultaneously makes no philosophical difference.

Roger Penrose provides a pithy summary of why artificial “intelligence” is a misnomer. There is no intelligence without understanding, and understanding requires awareness.[17] An operational definition of “understanding” is not sufficient because, for instance, a mathematical algorithm can be followed with or without understanding. Only the person who understands would be able to formulate a new algorithm for achieving the same task, since someone following it in the dark does not even know its purpose.

Computer programs, as products of human intelligence, can thus appear to be intelligent themselves. But effective computer simulations almost always exploit some significant human understanding of the underlying mathematical ideas. Computer algorithms that can distinguish knotted string from simple heaps depend on very complex and recently developed (twentieth-century) geometric ideas, whereas a person can often test the string by simple manual manipulation or common sense.[18]

The Important Difference Between Actual Major Scientists and Strong AI Proponents

The major figures of science and physics tend to be religious or mystically inclined. Isaac Newton and Einstein believed in God, and Newton in particular took an immense interest in religion, as did Leonhard Euler. Schrödinger, Heisenberg, Bohr, Eddington, Jeans, Pauli and Planck were all very conscious of the limits of physics in describing reality. They knew that restricting analysis to things that can be measured, while very helpful for physics, omits most of what is truly significant, and they considered it important to highlight this fact. Wittgenstein captured this view when he wrote that the meaning of the world lies outside it. By “the world” he meant the empirical world of facts.

It is a very strange phenomenon that people who have achieved nothing of significance look at that long list of truly great physicists and claim that they were all out of their minds. It would seem more logical to suppose that these physicists had got something right, given that they made such astounding breakthroughs.

The same kind of small-mindedness can be seen in the English professor who claims she is going to school Shakespeare and Dostoevsky and convict them of some political error. She has exactly reversed the order of who should be learning from whom.

To look at the greatest achievements of the human race and to think that one knows better than their originators is an exercise in resentment, envy and total self-delusion.

Stephen Hawking is a notable counter-example to this claim: a genuinely productive physicist who shared the perspective of the anti-spiritual crowd. He also believed that philosophy is now defunct, though, given the quality of thought often coming out of philosophy departments, he might be largely right, at least about modern philosophy!

It should be noted that arguments against philosophy are themselves philosophical. In fact, some of the best philosophy criticizes philosophy. But philosophical arguments against, say, metaphysics are just epistemology – another field of philosophy.

Those ignorant of past philosophy are doomed to reinvent it and to repeat old mistakes. Hawking had not broken free of prior philosophies; he simply repeated the banal and tepid assertions of the woefully uninformed. He was like a music student who refuses to study “old,” “defunct” music in order to be wonderfully original, and who then comes up with the same old junk any novice can be expected to produce, paining the soul of his long-suffering music professor.

Sheldrake comments that Hawking held a certain fascination for the public because of his wrecked body. He seemed reduced to a disembodied mind, free only to think, but not to move about in the world under his own steam.

As a quote from Time magazine on the cover of his bestselling book, A Brief History of Time (1988), put it, “Even as he sits helplessly in his wheelchair, his mind seems to soar ever more brilliantly across the vastness of space and time to unlock the secrets of the universe.” This image of a disembodied mind also harks back to the visionary journeys of shamans, whose spirits could travel into the underworld in an animal form, or fly into the heavens like a bird.[19]

He could perhaps be compared with the much more appealing (to me) Albert Einstein, whose wild hair and avuncular look seemed emblematic of the eccentric but lovable genius. The fact that Hawking could only talk through a computerized “voice” would surely endear him to those hoping to join their souls to an inanimate machine, to download their minds into a computer, or to kneel before the new computer overlords.

Notes

[1] Stanley Jaki, Brain, Mind, and Computers, p. 198.

[2] Oliver Sacks, The Man Who Mistook His Wife for a Hat, chapter 23.

[3] From a talk by Piero Scaruffi called “Intelligence is not Artificial.” https://www.youtube.com/watch?v=CTof1qKMbNU&feature=youtu.be&fbclid=IwAR0m8tUHBwEX8R8cyX0-gzmRSd0PL2T0B6LKk-6FBIWm13x-2b3OicptlWQ

[4] See my article “The Illogicality of Determinism: Further Considerations” for the full argument: https://sydneytrads.com/2016/10/28/richard-cocks-4/

[5] Rupert Sheldrake, Science Set Free, pp. 47-48.

[6] Ibid., p. 48.

[7] https://orthosphere.wordpress.com/2018/05/19/godels-theorem/

[8] https://orthosphere.wordpress.com/2018/05/17/the-halting-problem/

[9] For more on this topic: https://orthosphere.wordpress.com/2018/05/23/chaos-and-order-the-right-and-left-hemispheres/

[10] https://orthosphere.wordpress.com/2018/10/09/utilitarianism-a-new-kind-of-evil/

[11] http://peopleofshambhala.com/darwin-vs-morality-trying-to-find-a-biological-basis-for-morality/

[12] https://sydneytrads.com/2017/10/23/richard-cocks-10/

[13] Roger Penrose, The Emperor’s New Mind, p. 429.

[14] This explanation is based on Roger Penrose, Shadows of the Mind, pp. 18-19.

[15] Ibid., p. 19.

[16] Ibid.

[17] Roger Penrose, pp. 38-39.

[18] Penrose, p. 60.

[19] Sheldrake, p. 294.